The Programming Thread

- Thread starter chrizel


Let’s say you ask your programming language to do the simplest possible task: print out “hello world”. Generally this takes two syscalls: write and exit.

...

Most languages do a whole lot of other crap other than printing out “hello world”, even if that’s all you asked for.


https://drewdevault.com/2020/01/04/Slow.html

And hidden therein is my actual point: complexity. There has long been a trend in computing of endlessly piling on the abstractions, with no regard for the consequences. The web is an ever growing mess of complexity, with larger and larger blobs of inscrutable JavaScript being shoved down pipes with no regard for the pipe’s size or the bridge toll charged by the end-user’s telecom. Electron apps are so far removed from hardware that their jarring non-native UIs can take seconds to respond and eat up the better part of your RAM to merely show a text editor or chat application.

The PC in front of me is literally five thousand times faster than the graphing calculator in my closet - but the latter can boot to a useful system in a fraction of a millisecond, while my PC takes almost a minute. Productivity per CPU cycle per Watt is the lowest it’s been in decades, and is orders of magnitude (plural) beneath its potential. So far as most end-users are concerned, computers haven’t improved in meaningful ways in the past 10 years, and in many respects have become worse. The cause is well-known: programmers have spent the entire lifetime of our field recklessly piling abstraction on top of abstraction on top of abstraction. We’re more concerned with shoving more spyware at the problem than we are with optimization, outside of a small number of high-value problems like video decoding. Programs have grown fat and reckless in scope, and it affects literally everything, even down to the last bastion of low-level programming: C.


That’s the true message I wanted you to take away from my article: most programmers aren’t thinking about this complexity. Many choose tools because it’s easier for them, or because it’s what they know, or because developer time is more expensive than the user’s CPU cycles or battery life and the engineers aren’t signing the checks.


That's certainly true, but writing Hello World applications is a vanishingly small part of my work.

If I had written some of the things I've developed in Java (Groovy, Kotlin) in assembly, or even just in optimized C, the development effort (and with it the likelihood of bugs) would have been many times higher. You can also get to work by horse-drawn carriage, and it's vastly more environmentally friendly, but somehow nobody does that anymore...


And we shouldn't forget that for most applications it simply doesn't matter. The requirements aren't demanding enough that you need to worry about such things.

That said, you do have to be careful not to drift into wastefulness. Especially thanks to the cloud, it's easier to just buy a few more instances than to optimize the code a little.


Quick question: I need to sort a table in SQL from newest to oldest. But one column contains several identical case numbers (Aktenzeichen). I'd like rows with the same case number kept together, sorted from old to new, and each group of case numbers as a whole sorted from old to new as well.

I have a subquery that sorts everything from newest to oldest. But I'm stuck on how to bring the case numbers together and then sort them correctly.


| id | Aktenzeichen | created-at |
|---|---|---|
| 301 | 432 | 2022-03-14 |
| 300 | 123 | 2022-03-12 |
| 299 | 321 | 2022-03-09 |
| 298 | 123 | 2022-02-14 |
| 297 | 654 | 2022-02-13 |
| 296 | 123 | 2022-01-20 |

I'm migrating data into a new table. Instead of the case number (AZ) hanging off each row again, there is now a case file (Akte) that carries the AZ. Some other attributes that are identical for all objects move there as well.

To do this, I go through all the data, create the new Akte once, and then update it with the values from the newer rows with the same AZ.

That would work fine if I went through the data from old to new, i.e. the example above from bottom to top: I want to insert the old data first and then overwrite it with the changes from the newer rows.

But since there are hundreds of thousands of entries, I'd like the migration to handle the new data first and work its way back to the old data. The old data isn't that relevant; it's only kept for archival purposes.

So I'd like to iterate through the following from bottom to top:

| id | Aktenzeichen | created-at | note |
|---|---|---|---|
| 297 | 654 | 2022-02-13 | |
| 299 | 321 | 2022-03-09 | |
| 300 | 123 | 2022-03-12 | second newest |
| 298 | 123 | 2022-02-14 | same AZ, but older |
| 296 | 123 | 2022-01-20 | same AZ, but older |
| 301 | 432 | 2022-03-14 | newest |

The migration drags a long tail of relationships and business logic along with it, so it isn't simply a matter of copying rows over.
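One possible approach, sketched under assumptions: compute each case number's most recent `created_at` with a window function (`MAX(...) OVER (PARTITION BY ...)`, available in SQLite 3.25+, PostgreSQL and MySQL 8.0), then sort the groups by that value newest first and the rows inside each group oldest first. That is exactly the list above read from bottom to top. A loop then creates the Akte from the first (oldest) row of each group and lets every newer row overwrite it. The table name `entries`, the columns `aktenzeichen`/`created_at` (the real `created-at` would need quoting), and the `Akte` class with its fields are hypothetical; the sketch runs against an in-memory SQLite database so it is self-contained.

```python
import sqlite3
from dataclasses import dataclass

# Sample data from the example above; table and column names are hypothetical.
SAMPLE_ROWS = [
    (301, "432", "2022-03-14"),
    (300, "123", "2022-03-12"),
    (299, "321", "2022-03-09"),
    (298, "123", "2022-02-14"),
    (297, "654", "2022-02-13"),
    (296, "123", "2022-01-20"),
]

ORDER_QUERY = """
WITH grouped AS (
    SELECT id, aktenzeichen, created_at,
           -- the latest date of a case number decides where its whole group goes
           MAX(created_at) OVER (PARTITION BY aktenzeichen) AS group_latest
    FROM entries
)
SELECT id, aktenzeichen, created_at
FROM grouped
ORDER BY group_latest DESC,  -- groups: newest first
         aktenzeichen,        -- keeps groups with the same latest date apart
         created_at ASC       -- within a group: oldest first
"""


def make_sample_db() -> sqlite3.Connection:
    """Build an in-memory database holding the sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE entries (id INTEGER PRIMARY KEY, aktenzeichen TEXT, created_at TEXT)"
    )
    conn.executemany("INSERT INTO entries VALUES (?, ?, ?)", SAMPLE_ROWS)
    return conn


def migration_order(conn: sqlite3.Connection) -> list[tuple[int, str, str]]:
    """Return (id, aktenzeichen, created_at) rows in migration order."""
    return conn.execute(ORDER_QUERY).fetchall()


@dataclass
class Akte:
    """Hypothetical target record: one case file per Aktenzeichen."""
    aktenzeichen: str
    created_from: int  # id of the oldest source row (the one that created it)
    updated_from: int  # id of the newest source row applied so far
    updated_at: str    # created_at of that newest row


def migrate(rows) -> dict[str, Akte]:
    """Create one Akte per case number, then overwrite it with newer rows."""
    akten: dict[str, Akte] = {}
    for row_id, aktenzeichen, created_at in rows:
        akte = akten.get(aktenzeichen)
        if akte is None:
            # The first row of a group is its oldest: create the Akte.
            akten[aktenzeichen] = Akte(aktenzeichen, row_id, row_id, created_at)
        else:
            # Later rows are newer: their values overwrite the shared attributes.
            akte.updated_from = row_id
            akte.updated_at = created_at
        # Relationships and business logic for the row would be handled here.
    return akten


if __name__ == "__main__":
    rows = migration_order(make_sample_db())
    print([row[0] for row in rows])  # [301, 296, 298, 300, 299, 297]
    for akte in migrate(rows).values():
        print(akte)
```

Because the groups come out newest first, the most recent Akten are migrated early, while inside each group the newest values still win, which is the create-then-overwrite behavior described above.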


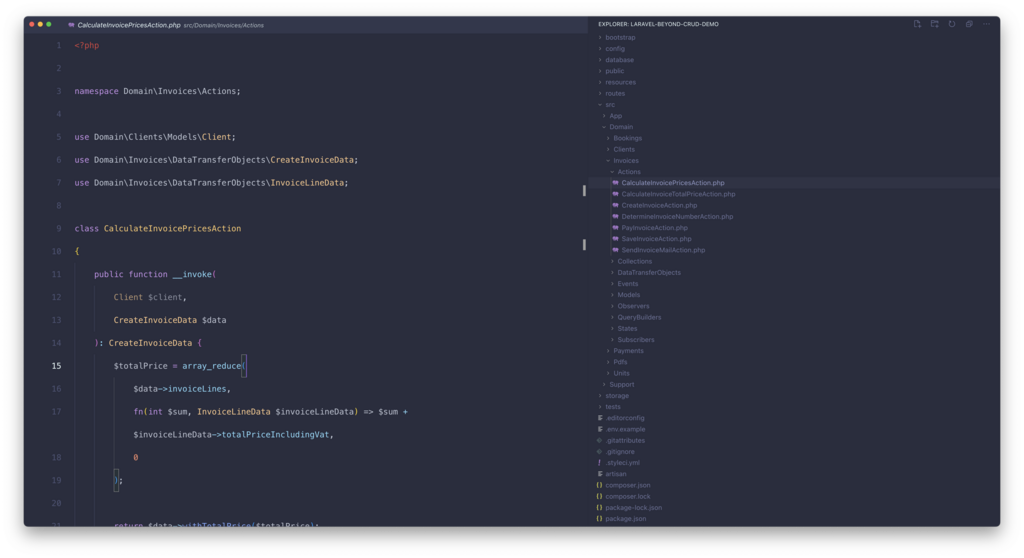

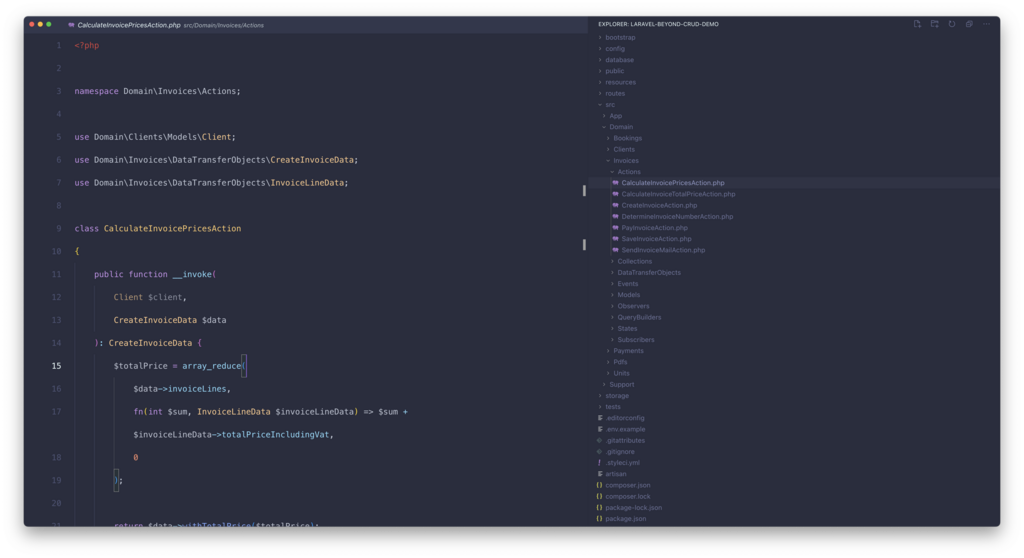

Hey, through work I got my hands on this book:

https://makevscodeawesome.com/launch

and I have to say, VSCode has gotten a whole lot better. I haven't adopted all of the UI changes (for example, I still like having Git changes highlighted), but holy moly, now that I've got many of the shortcuts down, it really is more pleasant. I can't get along with Vim, but I like it to this extent.

In short, you hide everything, including the file explorer (see the screenshot), and bring things in and out with shortcuts. It comes naturally once you get started.

And something I only just learned: if you press CMD+P repeatedly, you cycle directly through the files. I always pressed it once and then used the arrow keys to go down to the file I needed.

I've only been working on a Mac full-time since I changed jobs. My colleagues keep telling me how great Alfred is, but ever since I've had a clipboard history, it has become one of those tools a developer simply can't live without. When someone isn't using one while we're pairing, I go nuts.

I can highly recommend both if you work in VSCode and don't have a clipboard history yet.
